Improving Munin SNMP Performance

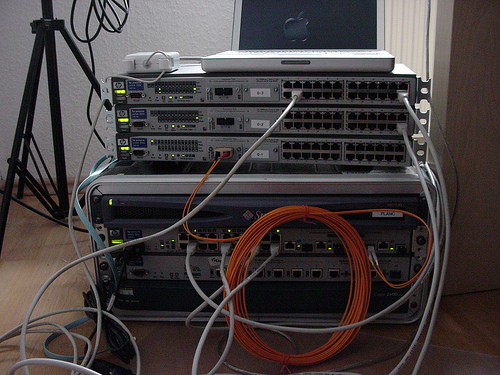

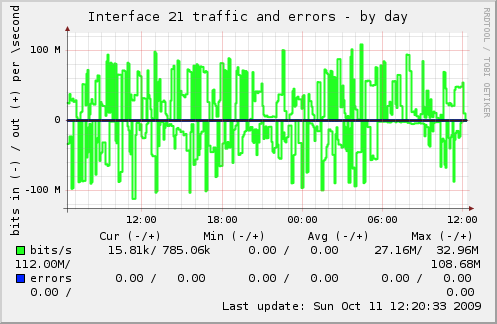

Last weekend I set up a server with Munin to monitor some resources on a network, and one task was to monitor individual switch ports for bandwidth and errors. Munin ships with SNMP plugins for this task, which work fine as long as the number of monitored devices/ports is fairly low.

The Problem

Using the standard Munin SNMP plugins to graph bandwidth and errors of a given interface on a remote (SNMP-enabled) device requires 4 SNMP connections per interface. My test network consisted of 99 monitored switch ports, and this is where the problems started ...

Monitoring only a few ports is no problem, but monitoring 4 values on each of 99 ports means nearly 400 (4 × 99 = 396) SNMP connections per monitoring interval. The cost of an SNMP connection seems to be rather high, so it is not very efficient to open a new connection for every collected value, as Munin does. The monitoring server (a Sun Netra) needed nearly 9 minutes to collect all data, but Munin needs fresh data every 5 minutes to build its graphs correctly.
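The arithmetic behind the problem is easy to check. The sketch below counts the SNMP requests per polling round when every value gets its own request and compares the measured collection time with Munin's 5-minute interval; the helper names are hypothetical and not part of Munin.

```python
# Illustrative arithmetic only; these helpers are hypothetical, not part of Munin.
MUNIN_INTERVAL_S = 5 * 60  # Munin polls every 5 minutes


def requests_per_round(ports, values_per_port, values_per_request=1):
    """SNMP requests needed to collect every value once."""
    requests_per_port = -(-values_per_port // values_per_request)  # ceiling division
    return ports * requests_per_port


def fits_interval(collection_time_s, interval_s=MUNIN_INTERVAL_S):
    """True if one polling round finishes before the next one is due."""
    return collection_time_s <= interval_s


print(requests_per_round(ports=99, values_per_port=4))  # one request per value
print(fits_interval(9 * 60))  # roughly 9 minutes measured on the Sun Netra
```

This prints 396 and False: one request per value is too many requests for this server to finish within the interval.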

A Solution

A simple solution was to replace the SNMP plugins with a rewritten version that makes only one SNMP connection to collect all 4 values, thereby reducing the number of connections, and the load on the monitoring server, by a factor of 4. With the new plugin all the data could be collected in about 2 minutes, fast enough for Munin's hardcoded 5-minute interval.
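The saving comes from the fact that Net-SNMP's `snmpget` accepts several OIDs in a single invocation. The sketch below contrasts building one request per counter with one request per interface; it only constructs argument lists and never contacts a device. The host name `switch1`, the interface index `12`, and the function names are made-up examples.

```python
# Builds snmpget argument lists only; nothing is executed here.
IF_TABLE = "1.3.6.1.2.1.2.2.1"  # IF-MIB ifTable entry
COLUMNS = {
    "recv": 10,    # ifInOctets
    "send": 16,    # ifOutOctets
    "errin": 14,   # ifInErrors
    "errout": 20,  # ifOutErrors
}


def base_cmd(host, community="public"):
    return ["snmpget", "-v", "2c", "-c", community, "-O", "v", host]


def one_request_per_value(host, ifindex):
    """Models the one-request-per-counter pattern: four separate snmpget calls."""
    return [base_cmd(host) + ["%s.%d.%s" % (IF_TABLE, col, ifindex)]
            for col in COLUMNS.values()]


def one_request_per_interface(host, ifindex):
    """All four OIDs in a single snmpget call, as the rewritten plugin does."""
    oids = ["%s.%d.%s" % (IF_TABLE, col, ifindex) for col in COLUMNS.values()]
    return [base_cmd(host) + oids]


print(len(one_request_per_value("switch1", 12)))
print(len(one_request_per_interface("switch1", 12)))
```

The first call yields four commands per interface, the second only one; across 99 ports that is the factor of 4 described above.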

Without further ado, here is the SNMP plugin I hacked together to monitor more than a few interfaces with Munin:

```python
#! /usr/bin/env python
#
# SNMP plugin for Munin written and (c) by Arne Brodowski
# Use it as a drop-in replacement for the bundled snmp__if_ and snmp__if_err_
# plugins.
# Feel free to redistribute this code under the terms of the new BSD license.

import os
import sys
import re
import subprocess

# The SNMP community is taken from the plugin environment (env.community).
community = os.environ.get('community', 'public')

# Host and interface index come from the symlink name: snmp_<host>_if_<index>
m = re.match(r'(.*)snmp_(?P<host>[^_]*)_if_(?P<if>[\d]*)', sys.argv[0])
if m is None:
    sys.exit("plugin must be named snmp_<host>_if_<index>")
conf = m.groupdict()
conf.update({'community': community})

try:
    if sys.argv[1] == "config":
        print("host_name %(host)s" % conf)
        print("graph_title Interface %(if)s traffic and errors" % conf)
        print("graph_order recv send errin errout")
        print("graph_args --base 1000")
        print("graph_vlabel bits (traffic) / packets (errors) in (-) / out (+) per ${graph_period}")
        print("graph_category network")
        print("graph_info This graph shows traffic and errors for interface %(if)s." % conf)
        print("send.info Bits sent/received by this interface.")
        print("recv.label recv bps")
        print("recv.type DERIVE")
        print("recv.cdef recv,8,*")  # octets -> bits
        print("recv.max 2000000000")
        print("recv.min 0")
        print("send.label bits/s")
        print("send.type DERIVE")
        print("send.negative recv")  # recv is drawn as the negative half of this field
        print("send.cdef send,8,*")  # octets -> bits
        print("send.max 2000000000")
        print("send.min 0")
        # Error counters count packets, not octets, so they get no "8,*" cdef.
        print("errout.info Packets received with errors / not sent because of errors.")
        print("errin.label err in")
        print("errin.type DERIVE")
        print("errin.max 2000000000")
        print("errin.min 0")
        print("errout.label errors")
        print("errout.type DERIVE")
        print("errout.negative errin")
        print("errout.max 2000000000")
        print("errout.min 0")
        sys.exit(0)
except IndexError:
    # No argument given: Munin wants the current values.
    pass

# Fetch all four ifTable counters for this interface with a single snmpget.
cmd = ["snmpget", "-v", "2c", "-c", conf['community'], "-O", "v", conf['host'],
       "1.3.6.1.2.1.2.2.1.10.%(if)s" % conf,  # ifInOctets
       "1.3.6.1.2.1.2.2.1.16.%(if)s" % conf,  # ifOutOctets
       "1.3.6.1.2.1.2.2.1.14.%(if)s" % conf,  # ifInErrors
       "1.3.6.1.2.1.2.2.1.20.%(if)s" % conf,  # ifOutErrors
       ]
out = subprocess.Popen(cmd, stdout=subprocess.PIPE,
                       universal_newlines=True).communicate()[0]

# With "-O v" each line holds just the value, e.g. "Counter32: 123456".
for name, line in zip(('recv', 'send', 'errin', 'errout'), out.splitlines()):
    print("%s.value %s" % (name, line.split()[1]))
```
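The plugin takes both the target host and the interface index from its own file name, so it is installed as one symlink per switch port, named like `snmp_<host>_if_<index>` in the same way as the bundled `snmp__if_` plugin. The sketch below isolates that parsing step so it can be tried without a switch. It is a slightly stricter, hypothetical variant of the plugin's regular expression (anchored, at least one digit, explicit error); the paths are made-up examples.

```python
import re

# Stricter variant of the plugin's pattern: anchored with "$" and requiring
# at least one digit for the interface index.
PLUGIN_NAME = re.compile(r'(.*)snmp_(?P<host>[^_]*)_if_(?P<if>\d+)$')


def parse_plugin_name(path):
    """Return (host, interface index) encoded in a plugin symlink name."""
    m = PLUGIN_NAME.match(path)
    if m is None:
        raise ValueError("expected a name like snmp_<host>_if_<index>: %r" % path)
    return m.group("host"), m.group("if")


print(parse_plugin_name("/etc/munin/plugins/snmp_switch1_if_12"))
```

Because the host part may not contain underscores, host names with `_` cannot be used in the symlink name; dotted IP addresses such as `snmp_10.0.0.1_if_3` work. The community string is read from the `community` environment variable, which an `env.community` line in Munin's plugin configuration can set.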

Without doubt this can be improved, but it works for me (TM), and I share it in the hope that others can solve their problems with it. The code is deliberately verbose to make it easy to adapt to other use cases.
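One way to push adaptability further is to drive the output from a single table of field names and ifTable columns, so that monitoring another counter means adding one entry (plus its `config` lines). This is a hypothetical refactoring, not the plugin's code. It also assumes that any line which is not a plain `Type: number` pair, such as an error message in place of a value, should be reported as `U`, Munin's marker for an unknown value.

```python
# Hypothetical table-driven variant of the plugin's output step, not the original code.
# Each entry: (Munin field name, IF-MIB ifTable column used to build the OID).
FIELDS = [
    ("recv", 10),    # ifInOctets
    ("send", 16),    # ifOutOctets
    ("errin", 14),   # ifInErrors
    ("errout", 20),  # ifOutErrors
]


def format_values(snmpget_output, fields=FIELDS):
    """Map 'snmpget -O v' output lines to Munin 'field.value' lines.

    A line that is not a simple 'Type: number' pair (or a missing line)
    is reported as U, Munin's marker for an unknown value.
    """
    lines = snmpget_output.splitlines()
    result = []
    for i, (name, _column) in enumerate(fields):
        parts = lines[i].split() if i < len(lines) else []
        value = parts[1] if len(parts) == 2 and parts[1].isdigit() else "U"
        result.append("%s.value %s" % (name, value))
    return result


sample = "Counter32: 123456\nCounter32: 654321\nCounter32: 0\nCounter32: 3\n"
for line in format_values(sample):
    print(line)
```

Reporting `U` instead of crashing on an unexpected line means a single unreachable counter leaves a gap in the graph rather than dropping all four values.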

Conclusion

Munin's bundled SNMP plugins are not bad; they are flexible and offer an auto-configuration mechanism. For a small number of monitored devices/interfaces they work well. The problems I encountered start once you monitor more devices than Munin can query within its 5-minute interval. The solution above is meant to replace the Munin SNMP plugins in an already configured Munin node, and you should know why you need to replace them.

There is a reason tools like Cacti and MRTG exist: they have highly optimized SNMP data collectors, which can cope with a much higher number of devices/interfaces than Munin with its "one plugin per monitored data source" approach.

Update

Please see my Wiki Page for further information about the plugin.

Thanks a lot, your code has certainly saved me some work -- mostly because I need several extensions and didn't want to touch Perl ;)

Posted by Christian Hoffmann 1 year, 4 months after publication of the blog entry on 27 Feb. 2011, 23:58.